Can the machine speak? we might once have asked. Can it sing? The question is an old one; as old as Ada Lovelace, the Enchantress of Numbers herself; or even as old as the first clockwork androids to grace the opera house stages of the Enlightenment.

For half a century now, we have known that the answer is yes. Ever since Arthur C. Clarke visited a friend at Bell Labs and chanced upon an IBM singing, "Daisy, Daisy, give me your answer do." But if this were no more than the artificial parroting of its human masters, like a dog that’ll say sausages if you wiggle its jaw in a certain way, when might we hear a machine intone songs of its very own?

Can the machine sing, as it were, in its own tongue? Recently, for perhaps the first time, I have felt that the answer might just be yes.

The idea is not new. When Lejaren Hiller and Leonard Isaacson programmed their Illiac Suite in 1956 they used information theory and Stanislaw Ulam’s ‘Monte Carlo’ method, in the hope, as they said, of finding the computer’s native language. The result was a set of string quartets that swung between baroque counterpoint and Schoenbergian dodecaphony. Their heirs will be found in the endless Chopin-esque preludes and Bach-alike chorales spun out by David Cope’s Experiments In Musical Intelligence algorithms from a yarn of Markov chains and random numbers.

Most of the spam music that is beginning to clog up online music services is still manmade. Session players with names like The Hit Crew and Tribute to Macklemore record hundreds of soundalike cover versions in the hope that hapless browsers will mistake their knock-off for the real thing. Meanwhile, ‘personalised music’ artists, the Birthday Song Crew, boast 4,775 songs on iTunes, from ‘Happy Birthday Aariana’ to ‘Happy Birthday Zimena’, each one identical bar the proper noun addressed in the chorus.

What happens, though, when such fearless creatives cotton on to the algorithmic methods of David Cope? They will be churning out an endless grey goo of new Macklemore material, more Macklemore-ish than Macklemore himself. The original artists will not be able to compete with their own spambot substitutes.

Just a few weeks ago, Google admitted that it no longer understands how its own ‘deep learning’ systems work. The software has outthought its programmers. James Bridle’s New Aesthetic blog was one of the first to pick up on this little titbit from the recent Machine Learning Conference in San Francisco. I talked to Bridle in 2013 about how spambots may actually help us to write new kinds of stories. He spoke in rapt tones about “the quality of language of spam and the kind of broken text you find on the internet, almost as a kind of vernacular of the network itself”.

When I asked Bridle about music, he pointed to the work of Holly Herndon as someone whose hyper-connected liminal techno bore some parallels to the kind of ‘network fictions’ that interested him. A few months later, Herndon’s name came up again when I spoke to British artist Conrad Shawcross about his Ada Project (named after Lord Byron’s daughter, the first computer programmer, Ada Lovelace). Shawcross had installed a sixteen-and-a-half foot tall tack-welding robot in the Palais de Tokyo and invited a selection of female electronic music artists (including Herndon, plus Beatrice Dillon, Mira Calix, and others) to compose music in response to its winding, sinuous movements.

Instead of merely responding to music that already existed, Shawcross wanted his robot to act as a “primary source of inspiration” much as earlier generations of composers – from jazz to industrial music – had responded to the rhythms of the new industrial world. “The robot is the player and the musician is the theremin,” he said to me, “and they have to respond vocally to the machine.” But though she edited “very meticulously” to the rhythms of the machine, Herndon’s piece in particular sounded a million miles away from the machine aesthetics of the twentieth century. She envisaged a future in which machines would not replace but enhance the human body, making them “go beyond the physical limitations that we have.”

In November, I met Herndon at Le Cube, a digital arts centre in the Parisian banlieues. She told me about her fellow student at Stanford University’s Centre for Computer Research in Music and Acoustics (CCRMA), Kurt Werner, whose music is built out of layered “compression glitch”, like a version of Alvin Lucier’s ‘I Am Sitting in a Room’ in which the room whose acoustic signature finally engulfs the sound is now the virtual space of the computer. And she talked about a concert she played in New York where her laptop “just had a total breakdown … doing all of this weird digital artefacting”. After the show everyone was amazed at the extraordinary sounds they presumed she had deliberately crafted.

Finally, we found ourselves speculating about the music of a post-human future in which AIs are the dominant producers and consumers of music. “There’s a physical reason,” she averred, for the consonant sound of most human music, relating to the overtone series and the functioning of the ear. “So if it’s no longer a human-centred universe, then I could see it getting quite dissonant for human ears and maybe extremely rhythmically complex. Maybe we’re still stuck to the bpm of our pregnant mothers’ heartbeat. Maybe,” she speculated further of these potential post-Singularity sonics, “it would be in tune with the 50 hertz hum of electricity”.

For her performance earlier in the evening, part of the Île-de-France’s annual Festival Némo, I’d noticed her stroking what looked like walkman earbuds across the surface of her laptop. “They’re induction mics,” she explained. “They pick up electrical activity.” By rubbing them across the frame of her computer she was picking up otherwise hidden signals from the black box, bringing the oblique interior to the surface. “I’m giving it a voice,” she explained.

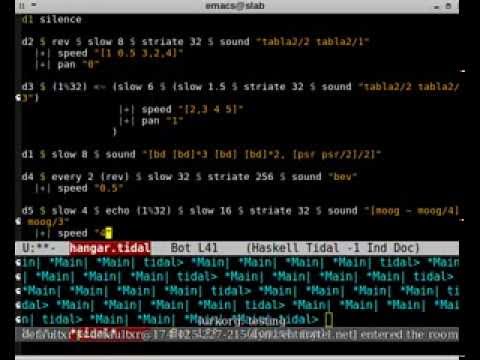

One of the things that James Bridle mentioned to me with regard to Herndon’s music was the way her use of versatile programming languages like Max/MSP makes “the machine [into] an instrument you are building even as you are playing it. Writing music while writing the code to generate that music.” This aspect of on-the-fly computational virtuosity is the specific focus of a burgeoning micro-scene that I first stumbled across earlier this year called Algorave.

A portmanteau of algorithm and rave, Algorave parties typically involve people dancing (or at least nodding appreciatively) to repetitive beats programmed live in fairly high-level functional programming languages like Haskell. The streams of alphanumeric code are projected onto a screen behind the performers, to be admired by onlookers much as a jazz aficionado might esteem the graceful fingering of a Pat Metheny or a John McLaughlin. Like a kind of fetish, the datastream comes to usurp the place of the performer as the object of the audience’s focus.

On the Algorave website, the progenitors emphasise the “alien, futuristic rhythms” introduced, as if by a sort of alchemy, by “strange, algorithm-aided processes”. “Bugs often get into the code which don’t make sense,” admits coder Alex McLean of Slub and Silicone Bake in an interview with Dazed online. “We just go with it.” The quirks of the system, its vagaries and idiosyncrasies, begin to guide the music into uncharted territories; the native zones of the digi-sonic diaspora.

That hoary old phrase ‘any sound imaginable’, which had survived unchanged from nineteenth century orchestration treatises to the cheerleaders of twentieth century electronic music and the product catalogues of every twenty-first century audio equipment manufacturer, is now defunct. It is no longer necessarily we who are doing the imagining to which our mechanical slaves meekly respond.

When I spoke with Holly Herndon, she reminded me of the work of Goodiepal, a Faroese artist whose uniquely freakish Narc Beacon album I had played to death upon its release a little over a decade ago. More recently, apart from getting rich on the “dark side of the internet” and nuisancing the Danish Royal Academy of Music, Goodiepal has been developing his idea of “radical computer music”. By this he means music not necessarily made by computers, but rather for consumption by computers, for the sake of intriguing and entertaining our future robot overlords.

Goodiepal, whose real name is Parl Kristian Bjørn Vester, figures that since we tend to be most fascinated by things that we find it hard to understand (love, death, the universe and so on), so too will any artificial intelligence to emerge in the next few decades. As such, his "radical computer music" is all about music that is completely unscannable by digital means. It becomes, paradoxically, a music that is uniquely human.

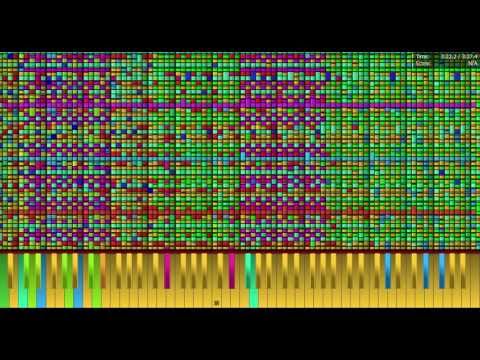

Though far from human-sounding by any traditional metric, another stream of contemporary music which pushes the scanning ability of digital systems to its limit goes by the name of Black Midi. The scene is so called for the incredible density of notes crammed onto its scores; I first happened upon it thanks to an article on Rhizome in late September.

Artists use cheap software like Synthesia, intended as a vaguely Guitar Hero-ish piano tuition aid, as a means of visualising their pieces for YouTube consumption. Notes rain down on the piano keyboard like a tropical storm, with some pieces averaging more than 60,000 notes a second and note counts running into the millions – even billions – over the course of a few minutes. At moments of the most extreme densities, the system’s ability to represent the information it is being fed breaks down and the sound erupts into a scree of digital distortion. There is a reason why so many ‘blackers’ refer to their tunes as “impossible music”.

When I described the Black Midi stuff I’d heard to Holly Herndon, she was apt to liken it to the early twentieth century cluster chords of Charles Ives, or to think of it as a kind of absurdist critique – whether deliberate or not – of the extremes of virtuosity demanded by the ‘new complexity’ music of composers like Brian Ferneyhough. The Rhizome article mentioned the unplayable player piano rolls of Conlon Nancarrow. But I was inclined to see something else, at once more novel and more strange.

As I plunged through the online rabbit hole of Black Midi wikis and YouTube channels, with their frenzied cover versions of classic video game soundtracks, their hyperbolic boasts of lavish cpu-busting note densities, I heard something at once comfortingly alien and peculiarly childlike. I kept thinking this was the kind of music that the robot Johnny 5 from the Short Circuit movies would make.

I feel fairly confident that Goodiepal would not approve of the blackers’ impish approach – most of his recent releases come on oddly shaped chunks of vinyl with no digital footprint whatsoever. But in their urgent quest after impossible things, the Black Midi crew fulfil in an odd sort of way Goodiepal’s most oft-repeated demand: to give back to computer music its long lost element of utopia.